This is part two in a series of posts that look at the marketing information available in your web site log reports. I am using the terminology used by the Web Trends Log Analyzer software. Other software uses similar terminology.

Unless there is a specific problem I’m researching, when I look at web log reports I go directly to the information that has the highest ROI. This is what I look at:

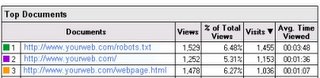

Top Documents – This is a list of the most frequently accessed pages on your web site. The image above shows the top three pages listed in a Web Trends report.

The Top Documents listing tells you how many times each page was viewed and how many unique visitors viewed it. The number of views will always be at least as high as the number of unique visitors: someone who views a page twice is counted as two views but only one visitor.
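To make the views-versus-visitors distinction concrete, here is a minimal Python sketch. It assumes the raw log has already been reduced to hypothetical `(visitor_id, page)` pairs, one per page view; it only illustrates the counting and is not how Web Trends computes its report.

```python
from collections import Counter, defaultdict

# Hypothetical, simplified log: one (visitor_id, page) pair per page view.
hits = [
    ("v1", "/index.html"),
    ("v1", "/index.html"),   # v1 views the home page a second time
    ("v2", "/index.html"),
    ("v2", "/products.html"),
]

def page_stats(hits):
    """Return {page: (views, unique_visitors)} for every page in the log."""
    views = Counter(page for _, page in hits)
    visitors = defaultdict(set)
    for visitor, page in hits:
        visitors[page].add(visitor)   # a set ignores repeat views by the same visitor
    return {page: (views[page], len(visitors[page])) for page in views}

print(page_stats(hits))
# {'/index.html': (3, 2), '/products.html': (1, 1)}
```

The home page gets three views but only two unique visitors, because `v1` viewed it twice.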

This listing also shows the average amount of time a visitor has the page displayed on their monitor. These times can give you a rough idea of how much of the information on the page is being read. This information is very useful when combined with information from other lists, as I’ll describe shortly.
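Log files record only when each page was requested, not when a visitor stopped looking at it. A common way log analyzers estimate viewing time (assumed here; Web Trends may differ in detail) is the gap between one request and the same visitor's next request, which means the last page of a visit has no measurable time. A minimal sketch, using hypothetical per-visit lists of `(seconds_since_start, page)` hits:

```python
from collections import defaultdict

# Hypothetical visits: each is an ordered list of (seconds_since_start, page) hits.
visits = [
    [(0, "/index.html"), (12, "/products.html"), (72, "/order.html")],
    [(0, "/index.html"), (8, "/about.html")],
]

def average_view_times(visits):
    """Average seconds per page, estimated as the gap to the visitor's next request.

    The last page of each visit has no following request, so it is skipped.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for hits in visits:
        for (t, page), (t_next, _) in zip(hits, hits[1:]):
            totals[page] += t_next - t
            counts[page] += 1
    return {page: totals[page] / counts[page] for page in totals}

print(average_view_times(visits))
# {'/index.html': 10.0, '/products.html': 60.0}
```

Note that `/order.html` and `/about.html` get no time at all, which is one reason these figures are only a rough guide.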

For my web sites the robots.txt file is usually at the top of the list. This file is used to tell search engine spiders how you want them to interact with your web site.

The other page I always expect to find at the top of the listings is the home page.

Top Entry Pages – This list shows the pages most frequently used to enter your web site. These are the pages that provide the first impression for your web site. These are very likely the most important pages on your web site. I focus a major portion of my efforts on these pages. They need to have a clear, concise, compelling message so that visitors quickly understand what you are selling/offering and why they should read more. You typically have less than ten seconds to capture the visitor’s attention and convince them your web site is worth their time.

Top Exit Pages – These are the pages most commonly viewed last, just before a visitor leaves your web site. You can expect the robots.txt file to be near the top of this list, and you can safely ignore it. Your home page will also probably be near the top of the list.

The pages on this list are your last chance to make a good impression before someone leaves your web site. While you may want to keep people on your web site forever, the reality is that everyone has to leave sometime. Look at these pages to determine whether it makes sense for someone to leave from that page, or whether visitors are leaving at the beginning or in the middle of a series of pages. If your “thank you for making a purchase” page is near the top of the list, you have an incredible web site: that is a very appropriate exit page. In most cases, however, the “thank you” page will not even make the list.
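Entry and exit pages both come from the same per-visit data: the entry page is the first page of a visit and the exit page is the last. The following sketch assumes visits have already been reconstructed as ordered lists of pages; the sample data and function name are hypothetical.

```python
from collections import Counter

# Hypothetical visits: each is the ordered list of pages one visitor viewed.
visits = [
    ["/index.html", "/products.html", "/order.html", "/thanks.html"],
    ["/index.html", "/about.html"],
    ["/products.html"],
    ["/blog/post1.html", "/index.html"],
]

def entry_and_exit_pages(visits):
    """Count how often each page starts (entry) or ends (exit) a visit."""
    entries = Counter(visit[0] for visit in visits if visit)
    exits = Counter(visit[-1] for visit in visits if visit)
    return entries, exits

entries, exits = entry_and_exit_pages(visits)
print("Top entry pages:", entries.most_common(3))
print("Top exit pages:", exits.most_common(3))
```

A one-page visit such as `["/products.html"]` counts as both an entry and an exit, which is exactly what the Single Access list isolates.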

Single Access Pages – These are pages people use to enter your web site; however, they look at only this one page and then leave. Compare this list with the “Top Documents” list to find out how long people are looking at these pages. If the times are very short, people are most likely looking for something other than what the page offers, so investigate why people are coming to the page and where they are coming from. If the times are longer (ten seconds or more), there are three likely explanations:

1) The page may be offering what they want in general, but they are not finding the specific information they want, or they don’t know what to do next. You may need to look at improving navigation or your call to action.

2) The page offers exactly what they wanted: their question was answered, so they left. If your goal is to answer visitors’ questions, you can classify this page as effective. For example, if this is a page with your company’s contact information, the visitor most likely got the phone number or address they needed and then left.

3) The page is poorly written, or the information is presented in a confusing fashion. You may need to look at your page design and copywriting.
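The first step of this analysis (find the one-page visits, then check how long those pages are viewed against the ten-second rule of thumb) can be sketched as follows. The visits, the `avg_seconds` figures and the function name are hypothetical; in practice the times would come from the Top Documents report.

```python
from collections import Counter

# Hypothetical data: ordered page lists per visit, plus the average seconds
# on each page (the kind of figure a Top Documents report shows).
visits = [
    ["/contact.html"],
    ["/contact.html"],
    ["/landing-a.html"],
    ["/index.html", "/products.html"],
]
avg_seconds = {"/contact.html": 35.0, "/landing-a.html": 4.0,
               "/index.html": 20.0, "/products.html": 60.0}

SHORT_VIEW_SECONDS = 10  # rule of thumb from the text: under ten seconds is "very short"

def classify_single_access(visits, avg_seconds, threshold=SHORT_VIEW_SECONDS):
    """Split single-access pages into 'short' and 'longer' by average view time.

    Pages with no recorded time default to 0 seconds and land in 'short'.
    """
    single = Counter(visit[0] for visit in visits if len(visit) == 1)
    result = {"short": [], "longer": []}
    for page, count in single.most_common():
        bucket = "short" if avg_seconds.get(page, 0.0) < threshold else "longer"
        result[bucket].append((page, count))
    return result

print(classify_single_access(visits, avg_seconds))
# {'short': [('/landing-a.html', 1)], 'longer': [('/contact.html', 2)]}
```

Pages in the "short" bucket point to a mismatch between what visitors expected and what the page offers; pages in the "longer" bucket need the judgment call described in the three options above.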

Top Paths Through Site – This provides a list of the most common series of pages people viewed while visiting your web site. A path may have a single page, or multiple pages. This gives you an idea of what people did during their visit.
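Counting paths amounts to treating each visit's full page sequence as a single key and counting identical sequences. A minimal sketch with hypothetical visits:

```python
from collections import Counter

# Hypothetical visits: each is the ordered list of pages one visitor viewed.
visits = [
    ["/index.html", "/products.html"],
    ["/index.html", "/products.html"],
    ["/contact.html"],
    ["/index.html"],
]

def top_paths(visits, n=3):
    """Return the n most common page sequences; a path may be a single page."""
    # Tuples are hashable, so a whole sequence can be used as a Counter key.
    return Counter(tuple(visit) for visit in visits if visit).most_common(n)

for path, count in top_paths(visits):
    print(count, " -> ".join(path))
```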

Each list also shows the percentage of total visitors that each page represents. This is significant information that helps you judge the relative importance of the rankings. A page at the top of the Single Access list that has 1% of your traffic is much less important than a Single Access page that has 10% of your visitors.
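The percentage is simply a page's visitor count divided by the total number of visitors. A short sketch with hypothetical figures (5,000 total visitors) shows how the share, rather than the rank alone, tells you how much a page matters:

```python
# Hypothetical figures for one reporting period.
total_visitors = 5000
single_access_visitors = {"/landing-a.html": 50, "/contact.html": 500}

def with_share(counts, total):
    """Return (page, visitors, percent_of_total) rows, most visitors first."""
    rows = [(page, n, 100.0 * n / total) for page, n in counts.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)

for page, n, pct in with_share(single_access_visitors, total_visitors):
    print(f"{page}: {n} visitors ({pct:.1f}% of total)")
# /contact.html: 500 visitors (10.0% of total)
# /landing-a.html: 50 visitors (1.0% of total)
```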

These five lists provide a wealth of information about what is happening on your web site. Combine the information from several lists, looking at pages that appear on more than one list, to get a better understanding of how visitors are reacting to your web pages. For example, if some of your Top Documents are also top Single Access pages, then a lot of the traffic to your web site is wasted: people are arriving and then leaving immediately.
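Finding pages that appear on two lists is an intersection over the top entries of each ranking. A minimal sketch, assuming both rankings are available as hypothetical lists ordered most frequent first:

```python
# Hypothetical report rankings, most frequent first.
top_documents = ["/robots.txt", "/index.html", "/landing-a.html", "/products.html"]
single_access = ["/landing-a.html", "/contact.html"]

def pages_on_both(first, second, n=10):
    """Pages in the top n of both rankings, kept in the order of `first`."""
    second_top = set(second[:n])
    return [page for page in first[:n] if page in second_top]

print(pages_on_both(top_documents, single_access))
# ['/landing-a.html']
```

Here `/landing-a.html` is both heavily viewed and a frequent one-page visit, so it is the first candidate for the kind of review described above.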

Keep in mind that as you make changes to your web site, the pages on these lists will change. They will also change as a result of competitive changes and changes in the market. You need to review your web logs regularly and act on the information they provide.